Architecture Documentation

The world has a billion cameras. None of them can reason.

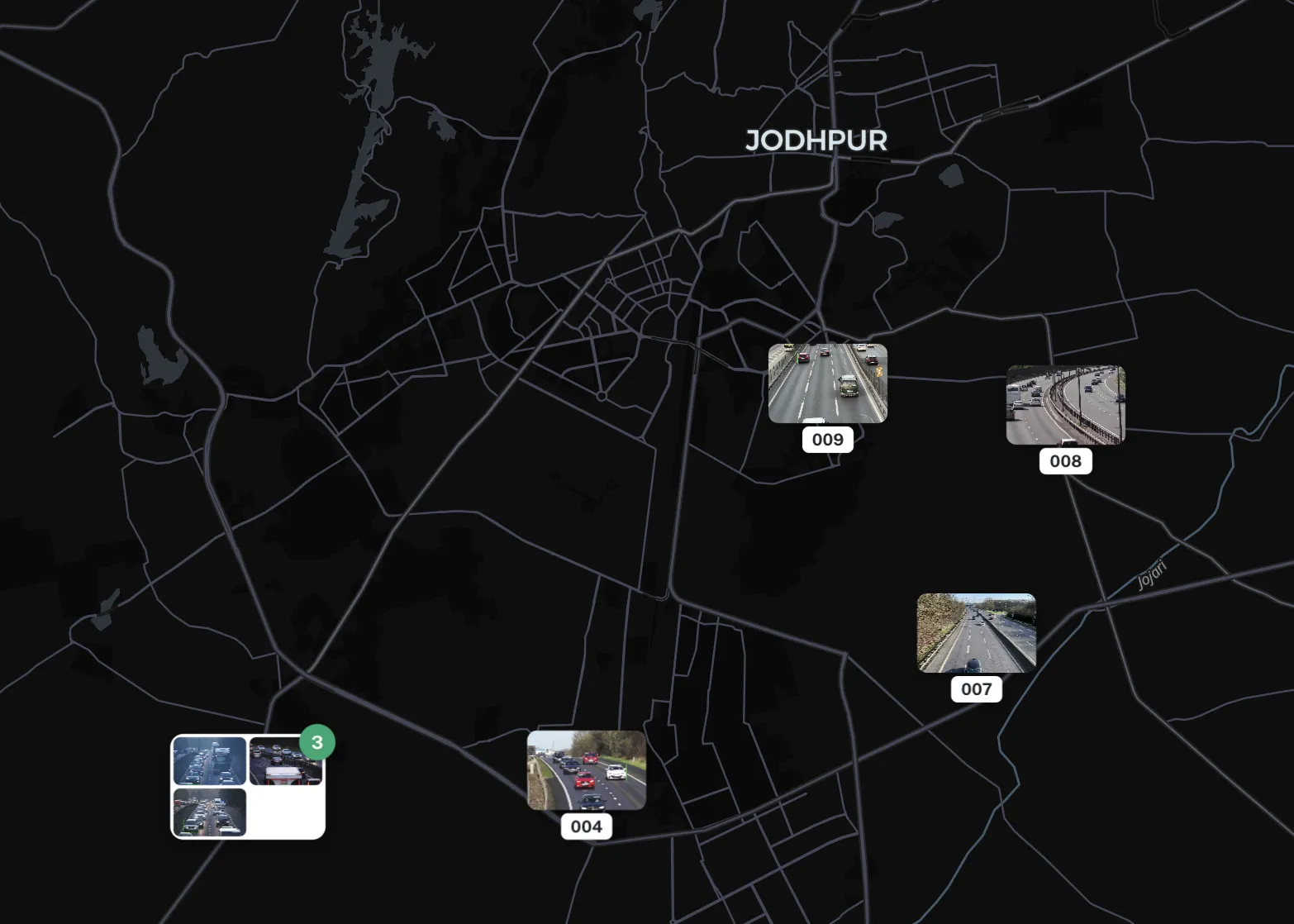

Drik processes 200+ camera feeds simultaneously on on-premises GPU infrastructure, generating legally admissible violation evidence and city-scale traffic intelligence in real time.

Reasoning Progress

Five Levels of Visual Reasoning

We measure our AI across five levels of visual understanding — from basic detection to predictive reasoning. Here's where we stand today.

Detect — It sees every object

Every vehicle, every person, every object — detected, classified, and counted in real time across every frame.

Recognize — It reads every plate, every face

License plates extracted. Vehicle makes and models identified. Colors, modifications, damage — all catalogued instantly.

Describe — It describes what it sees in words

Not just labels. Full natural-language descriptions of every scene, every event, every anomaly. Searchable. Queryable.

Reason — It understands cause and effect

Why did traffic stop? What caused the accident? Which vehicle triggered the chain reaction? The AI builds causal chains.

Predict — It anticipates what happens next

Pattern recognition across time and space. Congestion forecasts. Accident risk zones. Before it happens, the system sees it forming.

Product Universe

The Drik Ecosystem

All products are named after Sanskrit stars and cosmic concepts. Dhruva, the Pole Star, powers everything.

Dhruva

Core Engine (LIVE) · Pole Star: always present, always on

The foundational inference engine powering all products. SSM-based continuous video reasoning across 200+ camera feeds simultaneously.
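The source does not say which state space model Dhruva uses. The sketch below is a hedged illustration of why an SSM suits continuous, many-camera video. It implements a generic diagonal linear SSM recurrence in which each camera keeps a fixed-size state that is updated once per frame. `DiagonalSSM`, its parameters, and the scalar per-frame feature are hypothetical simplifications. Real models project high-dimensional frame embeddings and learn these parameters.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DiagonalSSM:
    """Hypothetical diagonal linear state space model (illustration only).

    Recurrence per state dimension i:
        h_t[i] = a[i] * h_{t-1}[i] + b[i] * x_t
        y_t    = sum_i c[i] * h_t[i]
    Memory is O(len(a)) no matter how many frames have been processed.
    """
    a: List[float]  # per-dimension decay; |a[i]| < 1 keeps the state stable
    b: List[float]  # input projection
    c: List[float]  # output projection
    h: List[float] = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        if not (len(self.a) == len(self.b) == len(self.c)):
            raise ValueError("a, b and c must have equal length")
        self.h = [0.0] * len(self.a)

    def step(self, x: float) -> float:
        """Consume one per-frame feature and return the model output."""
        self.h = [a_i * h_i + b_i * x for a_i, h_i, b_i in zip(self.a, self.h, self.b)]
        return sum(c_i * h_i for c_i, h_i in zip(self.c, self.h))


# One independent state per camera stream (hypothetical camera IDs).
streams = {
    cam_id: DiagonalSSM(a=[0.9, 0.5], b=[1.0, 1.0], c=[0.5, 0.5])
    for cam_id in ("cam-01", "cam-02")
}
outputs = [streams["cam-01"].step(x) for x in [1.0, 0.0, 0.0]]
```

The state has a fixed size, so memory per camera stays constant however long a stream runs, and old frames never need to be re-read. This is one reason SSMs are attractive for always-on feeds.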

Ashvinī

Traffic Intelligence (LIVE) · Twin Horsemen of dawn: swift justice

Traffic violation detection, ANPR, challan generation, and enforcement intelligence built on top of Dhruva.
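The source does not describe how violations are encoded. As a hedged example of what a single rule could look like, the sketch below flags a red-light violation when a tracked vehicle's centroid crosses a stop line while the signal is red. The line geometry, the sign convention for the approach side, and the names `side_of_line` and `red_light_violation` are all hypothetical.

```python
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


def side_of_line(p: Point, a: Point, b: Point) -> float:
    """Sign of the 2-D cross product: >0 on one side of line a->b, <0 on the other."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def red_light_violation(
    track: Sequence[Tuple[float, Point]],        # (timestamp_s, centroid) per frame
    stop_line: Tuple[Point, Point],
    red_intervals: Sequence[Tuple[float, float]],  # (start_s, end_s) when the signal is red
) -> Optional[float]:
    """Return the timestamp at which the track crossed the stop line during red, else None."""
    a, b = stop_line
    for (_, p0), (t1, p1) in zip(track, track[1:]):
        s0, s1 = side_of_line(p0, a, b), side_of_line(p1, a, b)
        crossed = s0 > 0 >= s1  # assumed convention: the approach side is positive
        if crossed and any(start <= t1 <= end for start, end in red_intervals):
            return t1
    return None
```

Keeping each rule a pure function of the track and the signal timeline makes it easy to replay against stored evidence. The timestamp it returns indicates which frames an evidence packager would need to keep.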

Chitrā

Annotation Tool (LIVE) · Brightest star Spica: precision in labeling

AI-assisted video annotation with active learning propagation. Open-sourced as DrikLabel.
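The source does not spell out how "active learning propagation" works. One common pattern works like this: a human draws a box once, the tool carries it forward through the detector's proposals in later frames, and it hands control back to the human as soon as the match becomes uncertain. The sketch below shows that pattern under these assumptions. `propagate_label`, the IoU matching, and the `min_iou` threshold are hypothetical and not DrikLabel's actual API.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def propagate_label(
    seed_box: Box,
    frames: List[List[Box]],  # detector proposals for each subsequent frame
    min_iou: float = 0.5,
) -> Tuple[Dict[int, Box], List[int]]:
    """Carry a human-drawn box forward; stop and queue the first uncertain frame for review."""
    auto_labels: Dict[int, Box] = {}
    review_queue: List[int] = []
    current = seed_box
    for idx, proposals in enumerate(frames):
        best = max(proposals, key=lambda p: iou(current, p), default=None)
        if best is None or iou(current, best) < min_iou:
            review_queue.append(idx)  # uncertain: ask a human instead of guessing
            break
        auto_labels[idx] = best
        current = best
    return auto_labels, review_queue
```

Example: with a seed at `(10, 10, 50, 50)`, a slightly shifted box in the next frame is accepted automatically. A frame whose proposals are all elsewhere lands in the review queue.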

Māyā

Synthetic Data (IN DEV) · Cosmic illusion: creates training worlds

Unreal Engine pipeline generating photorealistic synthetic training data with perfect ground-truth labels.

Agni

Edge Deploy (LIVE) · Sacred fire: forges edge engines

Edge deployment kit with TensorRT optimization, FP4/INT8 quantization, and OTA model updates.
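The arithmetic behind INT8 quantization is easy to show in isolation. The sketch below implements symmetric per-tensor INT8 quantization of a weight list. It is only the core rounding step. TensorRT's own INT8 path also calibrates activation ranges on sample data, and that is not modeled here. `quantize_int8` and `dequantize_int8` are illustrative names.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8: w ~= scale * q with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # largest |w| maps to 127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from INT8 codes."""
    return [scale * v for v in q]
```

Each weight is stored in one byte plus a shared scale. The reconstruction error per weight is bounded by half the scale.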

Future Horizons

System Architecture

L1 Container Architecture

Six deployable containers running on-premises — from RTSP ingest to challan delivery, all on a single GPU server rack.

The L1 architecture consists of six deployable containers:

Video Ingest Gateway: 200+ RTSP cameras, a frame sampler at 10 FPS, and CUDA preprocessing.
Dhruva Inference Cluster: YOLOv8+ detection, ByteTrack tracking, and vehicle ReID at batch size 32 with FP4 on four RTX PRO 5000 GPUs.
Event Engine: violation rules, an evidence packager, and a challan generator.
DrikNetra ANPR: multi-frame super-resolution, OCR, and lookup against Vahan, India's national vehicle registration database.
Output services: Dashboard, API Gateway, and Alerts.

Data stores: PostgreSQL with Redis, a 100 TB NAS archive, and Prometheus with Grafana.
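ByteTrack's key idea is a two-stage association. Confident detections are matched to existing tracks first. Tracks that remain unmatched then get a second chance against low-score detections, such as partially occluded vehicles, instead of those detections being discarded outright. The sketch below illustrates that idea under stated simplifications: greedy IoU matching stands in for the Hungarian algorithm, and there is no Kalman-filter motion prediction. The thresholds and function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]           # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_match(tracks: Dict[int, Box], dets: List[Box], min_iou: float) -> Dict[int, int]:
    """Pair tracks with detections, highest IoU first (stand-in for Hungarian matching)."""
    candidates = sorted(
        ((iou(t_box, d_box), tid, di) for tid, t_box in tracks.items() for di, d_box in enumerate(dets)),
        reverse=True,
    )
    matched: Dict[int, int] = {}
    used: Set[int] = set()
    for score, tid, di in candidates:
        if score < min_iou:
            break  # sorted descending, so every remaining pair is worse
        if tid in matched or di in used:
            continue
        matched[tid] = di
        used.add(di)
    return matched


def associate(
    tracks: Dict[int, Box], detections: List[Detection], high_thresh: float = 0.6, min_iou: float = 0.3
) -> Tuple[Dict[int, Box], List[Box]]:
    """Return updated track boxes and unmatched high-score boxes (new-track candidates)."""
    high = [box for box, score in detections if score >= high_thresh]
    low = [box for box, score in detections if score < high_thresh]
    # Stage 1: confident detections claim tracks first.
    first = greedy_match(tracks, high, min_iou)
    updated = {tid: high[di] for tid, di in first.items()}
    # Stage 2: leftover tracks may claim low-score detections; unmatched low ones are dropped.
    leftover = {tid: box for tid, box in tracks.items() if tid not in first}
    second = greedy_match(leftover, low, min_iou)
    updated.update({tid: low[di] for tid, di in second.items()})
    taken = set(first.values())
    new_tracks = [box for i, box in enumerate(high) if i not in taken]
    return updated, new_tracks
```

Without the second stage, a vehicle whose detection score dips behind a bus would lose its track ID. That breaks the continuity that ReID and violation rules rely on.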

Under the Hood

Low-Level Architecture

Seven core modules power the Drik pipeline — from raw video ingestion to predictive intelligence.

Ingestion Layer (LIVE)

Detection Engine (LIVE)

Multi-Object Tracking (LIVE)

License Plate Recognition (LIVE)

Violation Rule Engine (LIVE)

Evidence Pipeline (IN DEV)

CVWM, Vision World Model (PLANNED)

Performance

330x Faster Than Naive

Five layers of optimization turn a 50ms-per-frame baseline into 0.15ms amortized — enough to handle 200+ cameras on a single GPU server.
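The headline numbers can be checked for consistency. The check below treats the 0.15 ms amortized figure as the whole server's cost per frame. That is an assumption, since the source does not say whether the figure is per GPU or per server. It also covers both possible readings of "per frame": per sampled frame at 10 FPS or per ingested frame at 25 FPS.

```latex
\begin{aligned}
\text{speedup} &= \frac{50\ \text{ms}}{0.15\ \text{ms}} \approx 333 \quad (\text{quoted as } 330\times)\\
\text{sampled load} &= 200 \times 10\ \text{frames/s} = 2000\ \text{frames/s}
  \;\Rightarrow\; 2000 \times 0.15\ \text{ms} = 300\ \text{ms of GPU time per second}\\
\text{ingested load} &= 200 \times 25\ \text{frames/s} = 5000\ \text{frames/s}
  \;\Rightarrow\; 5000 \times 0.15\ \text{ms} = 750\ \text{ms of GPU time per second}
\end{aligned}
```

Under either reading, the work fits within one second of GPU time per second of video. At the 50 ms baseline, 2,000 frames/s would instead need 100 s of GPU time every second.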

Adaptive Sampling

200 cameras at 25 FPS produce 5,000 frames/sec; sampling at 10 FPS reduces that to 2,000 frames/sec. Sampling is motion-adaptive: static scenes drop to 2 FPS.
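The sampling arithmetic can be sketched as follows. The first function picks which source frames to keep so that kept frames are evenly spaced. The other two implement a simple motion-adaptive rate choice. The motion metric (mean absolute pixel difference) and the 0.02 threshold are assumptions, and the function names are hypothetical.

```python
from typing import List, Sequence


def sampled_indices(source_fps: int, target_fps: int, n_frames: int) -> List[int]:
    """Indices of source frames to keep when downsampling source_fps -> target_fps.

    A frame is kept whenever floor(i * target / source) advances, which spreads
    kept frames evenly (25 -> 10 FPS keeps 10 of every 25 frames).
    """
    if not 0 < target_fps <= source_fps:
        raise ValueError("need 0 < target_fps <= source_fps")
    return [
        i for i in range(n_frames)
        if (i * target_fps) // source_fps > ((i - 1) * target_fps) // source_fps
    ]


def motion_score(prev: Sequence[int], curr: Sequence[int]) -> float:
    """Mean absolute difference of two same-size grayscale frames, scaled to [0, 1]."""
    if len(prev) != len(curr) or not prev:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(prev, curr)) / (255 * len(prev))


def choose_fps(score: float, threshold: float = 0.02, active_fps: int = 10, static_fps: int = 2) -> int:
    """Motion-adaptive rate: sample at 10 FPS when the scene moves, 2 FPS when static."""
    return active_fps if score >= threshold else static_fps
```

For one second of 25 FPS video, `sampled_indices(25, 10, 25)` keeps 10 frames, and 200 cameras times 10 gives the 2,000 frames/sec figure. A static scene at 2 FPS keeps only 2 of the 25.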

Dynamic Batching

Collate 32 frames from different cameras into a single GPU batch. GPU utilization jumps from ~20% to saturated.
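The sketch below shows a cross-camera batcher of the kind described. Frames from any camera join one queue. A batch is released either when 32 frames are waiting or when the oldest frame has waited past a latency cap, so quiet periods do not stall results. The 10 ms cap and the `DynamicBatcher` interface are hypothetical. Timestamps are passed in explicitly to keep the example deterministic.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Deque, List, Optional, Tuple


@dataclass
class DynamicBatcher:
    """Hypothetical batcher: flush when full, or when the oldest frame waited too long."""
    max_batch: int = 32
    max_wait_s: float = 0.010  # latency cap; an assumed value, not from the source
    _queue: Deque[Tuple[float, str, Any]] = field(init=False, default_factory=deque)

    def submit(self, camera_id: str, frame: Any, now: float) -> None:
        """Enqueue one frame with its arrival time."""
        self._queue.append((now, camera_id, frame))

    def maybe_flush(self, now: float) -> Optional[List[Tuple[str, Any]]]:
        """Return up to max_batch (camera_id, frame) pairs, or None to keep waiting."""
        if not self._queue:
            return None
        oldest_ts = self._queue[0][0]
        if len(self._queue) < self.max_batch and now - oldest_ts < self.max_wait_s:
            return None  # wait for more frames so the GPU batch is fuller
        n = min(self.max_batch, len(self._queue))
        return [self._queue.popleft()[1:] for _ in range(n)]
```

Batch size and wait time trade off against each other. A larger batch improves GPU utilization, while the wait cap bounds the extra latency any single camera sees.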

FP4 Quantization

Native FP4 Tensor Core support on RTX PRO 5000. Mixed-precision: FP4 backbone + FP16 heads. Less than 2% accuracy loss.
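The sketch below simulates FP4 rounding in software to show what the format can represent. It uses the E2M1 layout (1 sign, 2 exponent, 1 mantissa bit), whose non-negative values are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. Each block of values shares one scale that maps the block's largest magnitude onto 6. The block size of 16 is an assumption; the source does not say which FP4 variant or block size is used. Real hardware also stores the scale in low precision, whereas here it is a Python float. In the mixed-precision setup described above, only the backbone weights would go through this step, and the FP16 heads would not.

```python
from typing import List

# Non-negative magnitudes representable by FP4 E2M1.
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)


def fake_quant_fp4(values: List[float], block: int = 16) -> List[float]:
    """Round values to block-scaled FP4 E2M1, then dequantize back to floats."""
    out: List[float] = []
    for start in range(0, len(values), block):
        chunk = values[start:start + block]
        max_abs = max(abs(v) for v in chunk)
        scale = max_abs / 6.0 if max_abs > 0 else 1.0  # largest |v| in the block -> 6.0
        for v in chunk:
            # Nearest representable magnitude (ties resolve to the smaller one).
            mag = min(E2M1_MAGNITUDES, key=lambda m: abs(abs(v) / scale - m))
            out.append((mag if v >= 0 else -mag) * scale)
    return out
```

The coarse grid (no value between 4 and 6, for example) shows why per-block scaling and keeping sensitive layers such as heads in FP16 matter for holding the accuracy loss down.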

TensorRT Compilation

Layer fusion (Conv+BN+ReLU), kernel auto-tuning, memory planning, and multi-stream execution.
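The sketch below shows why Conv+BN+ReLU can become a single layer at inference time. With frozen statistics, batch normalization is a per-channel affine transform. It can therefore be folded into the convolution's weights and bias, so that w' = w·γ/√(σ²+ε) and b' = (b − μ)·γ/√(σ²+ε) + β, leaving ReLU to run on the fused output. A pure-Python 1-D convolution keeps the example small. TensorRT applies such fusions itself when building an engine, and these helper functions are illustrative only.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def conv1d(x: List[float], kernels: Matrix, bias: List[float]) -> Matrix:
    """Valid 1-D cross-correlation of one input channel into len(kernels) output channels."""
    k = len(kernels[0])
    return [
        [sum(w[j] * x[i + j] for j in range(k)) + b for i in range(len(x) - k + 1)]
        for w, b in zip(kernels, bias)
    ]


def batchnorm(y: Matrix, gamma, beta, mean, var, eps: float = 1e-5) -> Matrix:
    """Inference-mode batch norm with frozen per-channel statistics."""
    return [[g * (v - m) / math.sqrt(s + eps) + bt for v in ch]
            for ch, g, bt, m, s in zip(y, gamma, beta, mean, var)]


def relu(y: Matrix) -> Matrix:
    return [[max(0.0, v) for v in ch] for ch in y]


def fold_bn_into_conv(kernels: Matrix, bias, gamma, beta, mean, var,
                      eps: float = 1e-5) -> Tuple[Matrix, List[float]]:
    """Return (kernels', bias') such that conv' equals BN(conv) at inference."""
    new_k: Matrix = []
    new_b: List[float] = []
    for w, b, g, bt, m, s in zip(kernels, bias, gamma, beta, mean, var):
        factor = g / math.sqrt(s + eps)
        new_k.append([wi * factor for wi in w])
        new_b.append((b - m) * factor + bt)
    return new_k, new_b
```

Folding removes one full read and write of the activation tensor per layer, which is where much of the fusion speedup comes from on memory-bound layers.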

Pipeline Parallelism

Three-stage pipeline on separate CUDA streams: preprocess, infer, and postprocess overlap in time.
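CUDA streams are not reachable from the Python standard library, so the sketch below uses a CPU stand-in to show the overlap pattern. It gives each stage its own worker thread, connected by bounded queues. While stage 2 processes batch n, stage 1 can already prepare batch n+1. In a real deployment each stage would issue work on its own CUDA stream instead. `run_pipeline` and the stage functions are hypothetical.

```python
import queue
import threading
from typing import Any, Callable, Iterable, List

_DONE = object()  # sentinel marking the end of the stream


def run_pipeline(items: Iterable[Any], stages: List[Callable[[Any], Any]], depth: int = 2) -> List[Any]:
    """Push items through stages, one worker thread per stage, so stages overlap in time.

    Bounded queues (maxsize=depth) act like double-buffering between streams.
    """
    queues = [queue.Queue(maxsize=depth) for _ in range(len(stages) + 1)]

    def worker(fn: Callable[[Any], Any], inbox: queue.Queue, outbox: queue.Queue) -> None:
        while True:
            item = inbox.get()
            if item is _DONE:
                outbox.put(_DONE)  # propagate shutdown downstream
                return
            outbox.put(fn(item))

    def feed() -> None:
        for item in items:
            queues[0].put(item)
        queues[0].put(_DONE)

    threads = [
        threading.Thread(target=worker, args=(fn, queues[i], queues[i + 1]), daemon=True)
        for i, fn in enumerate(stages)
    ]
    threads.append(threading.Thread(target=feed, daemon=True))
    for t in threads:
        t.start()

    results: List[Any] = []
    while True:  # drain the last queue so upstream stages never block forever
        item = queues[-1].get()
        if item is _DONE:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results
```

Usage would look like `run_pipeline(batches, [preprocess, infer, postprocess])`. Each stage is single-threaded and FIFO, so results come out in input order.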

End-to-End Latency Budget

Full path with ANPR + ReID — per violation event

How It Works

Your cameras. Our intelligence. Any scale.

Deploy Drik on-premises, in the cloud, or both. Our nodes scale with your camera network, from a single building to an entire city.

Open Source

Built in the Open

Our tools, models, datasets, and benchmarks are open-source. We build for the community and with the community.

DrikLabel (tools · TypeScript)

AI-assisted video annotation tool with active learning propagation.

DrikSynth (tools · C++ / Python)

Unreal Engine-based synthetic video data generator for training and validation.

DrikNetra (models · Python)

Multi-frame license plate super-resolution from video clips.

drik-bench (benchmarks · Python)

Benchmark suite for evaluating AI models on Indian traffic scenarios.

indian-plate-dataset (dataset)

Diverse Indian license plate dataset with varied formats, fonts, and languages.

traffic-chaos-100 (dataset)

100 challenging Indian traffic scenes for model evaluation.

drik-track (models · Python)

Vehicle tracking toolkit optimized for Indian traffic conditions.

drik-detect (models · Python)

Detection model configs and weights for Indian traffic objects.

Stack

Built With

From GPU kernels to dashboards — the full production and training stack.